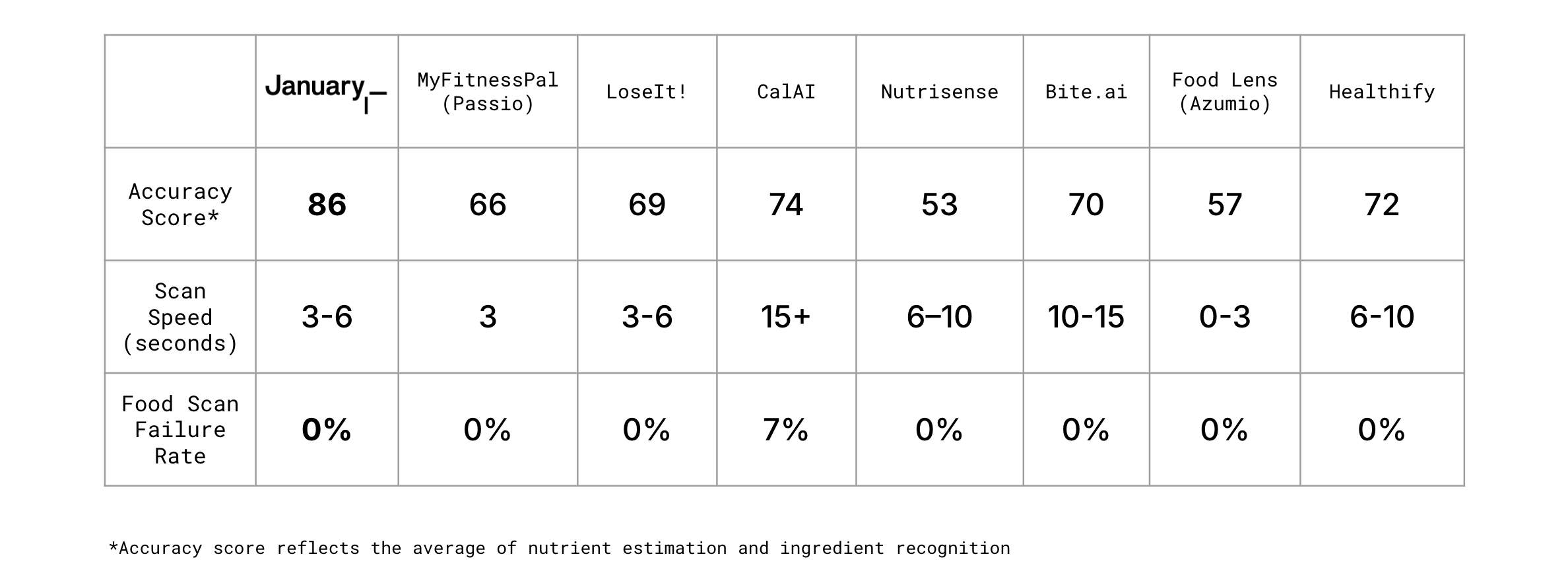

January’s photo scanner is the best on the market, bar none. Boasting an accuracy score of 86% across ingredient recognition and macronutrient estimation, it beats the competition by more than 12 percentage points.


Image Dataset: We selected a representative set of food photos with known nutritional data ("ground truth") from reputable datasets such as Nutrition5k and SNAPMe.

Testing Procedure: We ran each photo through each app’s photo scan twice to minimize anomalies, kept the better of the two results, measured processing time, and marked a test as failed if both attempts were unsuccessful.
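The best-of-two rule can be sketched as follows. This is a minimal illustration, not January's benchmark code: it assumes each attempt produces a numeric score where higher is better, or `None` when the scan fails, and the function name `best_of_attempts` is hypothetical. Processing-time measurement is not covered.

```python
from typing import Optional, Sequence


def best_of_attempts(scores: Sequence[Optional[float]]) -> Optional[float]:
    """Return the best score across repeated scans of one photo.

    Each entry is one attempt's score (higher is better), or None if that
    attempt failed. Returns None, i.e. a failed test, only when every
    attempt failed.
    """
    successful = [s for s in scores if s is not None]
    if not successful:
        return None  # all attempts failed -> mark the test as failed
    return max(successful)


# Two attempts per photo, as in the testing procedure.
print(best_of_attempts([0.82, None]))  # 0.82
print(best_of_attempts([None, None]))  # None
```

A single failed attempt does not fail the test; only a photo with no successful attempt counts as a failure.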

Ingredient Accuracy: Calculated as the percentage of ground-truth ingredients that the app correctly identified.
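As a minimal sketch, the ingredient accuracy metric works out to the share of ground-truth ingredients found by the scan. The sketch assumes ingredient names are already normalized so exact string matching works; a real benchmark may match names more loosely. The function name and sample meal are hypothetical.

```python
def ingredient_accuracy(predicted: set[str], ground_truth: set[str]) -> float:
    """Percentage of ground-truth ingredients that the scan correctly identified."""
    if not ground_truth:
        raise ValueError("ground truth must contain at least one ingredient")
    # Exact, already-normalized name matching is an assumption for this sketch.
    correctly_identified = predicted & ground_truth
    return 100.0 * len(correctly_identified) / len(ground_truth)


truth = {"rice", "chicken", "broccoli", "soy sauce"}
scan = {"rice", "chicken", "carrot"}
print(ingredient_accuracy(scan, truth))  # 50.0
```

Because the denominator is the ground-truth list, this is a recall-style measure: the extra "carrot" in the scan is not penalized.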

Macro Accuracy: Calculated by measuring the Mean Absolute Percentage Error (MAPE) of each macronutrient against its ground-truth values, then averaging these per-macro MAPEs into an overall macro accuracy score.
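A minimal sketch of the per-macro MAPE averaging, assuming each macro (here protein, carbs, and fat, in grams) is compared meal by meal against ground truth. The text does not say how the averaged MAPE is converted into the reported accuracy score, so the sketch stops at the average MAPE, where lower is better. Function names and sample values are hypothetical.

```python
def mape(predicted: list[float], actual: list[float]) -> float:
    """Mean Absolute Percentage Error, in percent."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    if any(a == 0 for a in actual):
        # Percentage error is undefined when the true value is zero.
        raise ValueError("MAPE is undefined for a zero ground-truth value")
    errors = [abs(p - a) / abs(a) for p, a in zip(predicted, actual)]
    return 100.0 * sum(errors) / len(errors)


def mean_macro_mape(pred: dict[str, list[float]], truth: dict[str, list[float]]) -> float:
    """Compute MAPE separately for each macro, then average those MAPEs."""
    per_macro = [mape(pred[macro], truth[macro]) for macro in truth]
    return sum(per_macro) / len(per_macro)


# Hypothetical grams for two meals.
truth = {"protein": [20.0, 40.0], "carbs": [50.0, 100.0], "fat": [10.0, 20.0]}
pred = {"protein": [22.0, 36.0], "carbs": [45.0, 100.0], "fat": [12.0, 20.0]}
print(round(mean_macro_mape(pred, truth), 2))  # 8.33
```

Here the per-macro MAPEs are 10% (protein), 5% (carbs), and 10% (fat), and their mean is about 8.33%.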

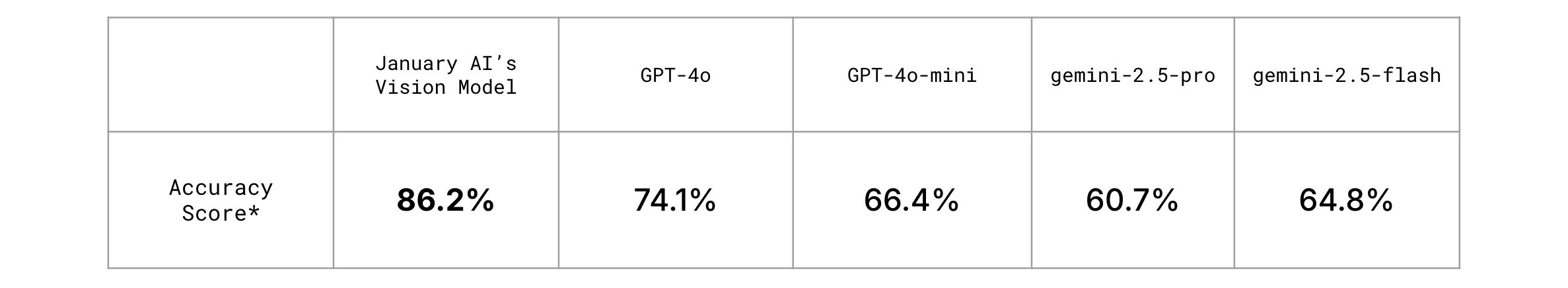

Methodology: We developed a public benchmark testing January AI's Vision model against OpenAI's GPT-4o and Google's Gemini 2.5. Using 1,000 real-world food images with human-reviewed annotations, spanning realistic lighting conditions, camera angles, and diverse cuisines, we've shown that our model is the definitive leader in this space and the new state of the art.

Performance is ranked by the composite Overall Score. For general VLMs, (Avg) denotes the average across four zero-shot prompts, and (Best) denotes the oracle score, i.e. the best result achieved across those prompts. Key metrics include Meal Name Similarity, Ingredient F1-Score, Macronutrient WMAPE (lower is better), average response time, and cost per image.
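Three of these metrics can be sketched with their standard textbook definitions, which may differ in detail from the paper's (for example, in how ingredient names are matched or how macros are weighted). We assume (Best) keeps the single best-scoring prompt; an oracle could instead pick the best prompt per image, which the text does not specify. Meal Name Similarity and the composite Overall Score are omitted because their formulas are not given here. All function names are hypothetical.

```python
from statistics import mean


def ingredient_f1(predicted: set[str], ground_truth: set[str]) -> float:
    """F1 over ingredient names: harmonic mean of precision and recall."""
    true_positives = len(predicted & ground_truth)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(ground_truth)
    return 2 * precision * recall / (precision + recall)


def wmape(predicted: list[float], actual: list[float]) -> float:
    """Weighted MAPE: total absolute error over total absolute true value."""
    total_actual = sum(abs(a) for a in actual)
    if total_actual == 0:
        raise ValueError("WMAPE is undefined when every true value is 0")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / total_actual


def avg_and_best(prompt_scores: list[float], higher_is_better: bool = True) -> tuple[float, float]:
    """(Avg) is the mean over prompts; (Best) keeps the best-scoring prompt."""
    best = max(prompt_scores) if higher_is_better else min(prompt_scores)
    return mean(prompt_scores), best


truth = {"rice", "chicken", "broccoli", "soy sauce"}
print(round(ingredient_f1({"rice", "chicken", "carrot"}, truth), 3))  # 0.571
print(wmape([22.0, 45.0, 12.0], [20.0, 50.0, 10.0]))  # 0.1125
# WMAPE is lower-is-better, so (Best) takes the minimum across prompts.
print(avg_and_best([0.14, 0.11, 0.12, 0.13], higher_is_better=False))
```

Unlike a recall-only ingredient accuracy, F1 also penalizes predicted ingredients that are absent from the ground truth. WMAPE divides total error by total true value, so small true values cannot inflate the score the way they can with per-item MAPE.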

Our results and methods are available on arXiv:

https://arxiv.org/abs/2508.09966

For full transparency, we've published the entire benchmark, code, and data on GitHub: https://github.com/January-ai/food-scan-benchmarks

Our Photo Scan APIs include the following services:


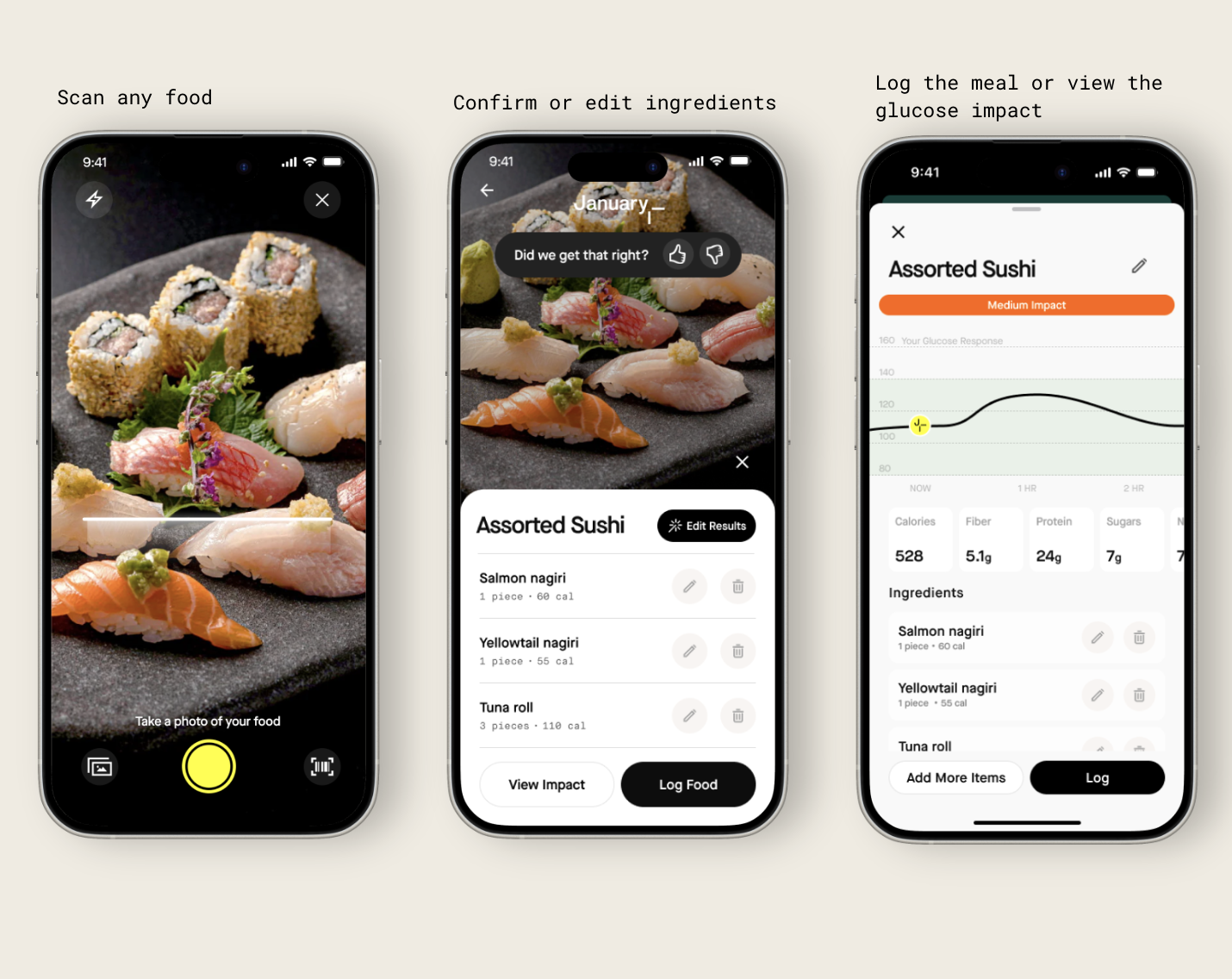

Our photo scanner includes local enhancements for accurate scans and meal naming wherever your users are.

Download the January app for free and start scanning in minutes.